Letting go of control

What agentic AI can teach Martech about trust, autonomy, and actual intelligence

I used to think I understood AI agents. In Martech, we talk about them all the time. Tools that take action based on data, automate tasks, score leads, and optimize send times. That’s what we call an agent, right? A helpful script or model that executes a narrow task faster or more accurately than humans can.

Then I had a conversation with Aampe’s CEO Paul Meinshausen on my podcast Couch Confidentials, and something clicked. Paul introduced a distinction I hadn’t fully grasped: an AI agent follows instructions, while agentic AI operates with goals, persistence, and the ability to revise its own strategies. That made me realize I’d been clinging to something familiar that I would have to loosen my grip on to move further: control.

Most marketers are comfortable with automation. We accept AI that assists, even when we don’t fully understand its inner workings. But agentic AI makes decisions. It changes tactics. It remembers what it’s done and adjusts accordingly. That goes beyond tooling. It introduces a kind of relationship. And that’s where it gets uncomfortable.

Paul reframed the issue for me. This is no longer about technical feasibility. Surprise, surprise, it is about leadership. Are we prepared to delegate meaningful decisions to AI systems? Can we trust something to act even when we’re not watching every move?

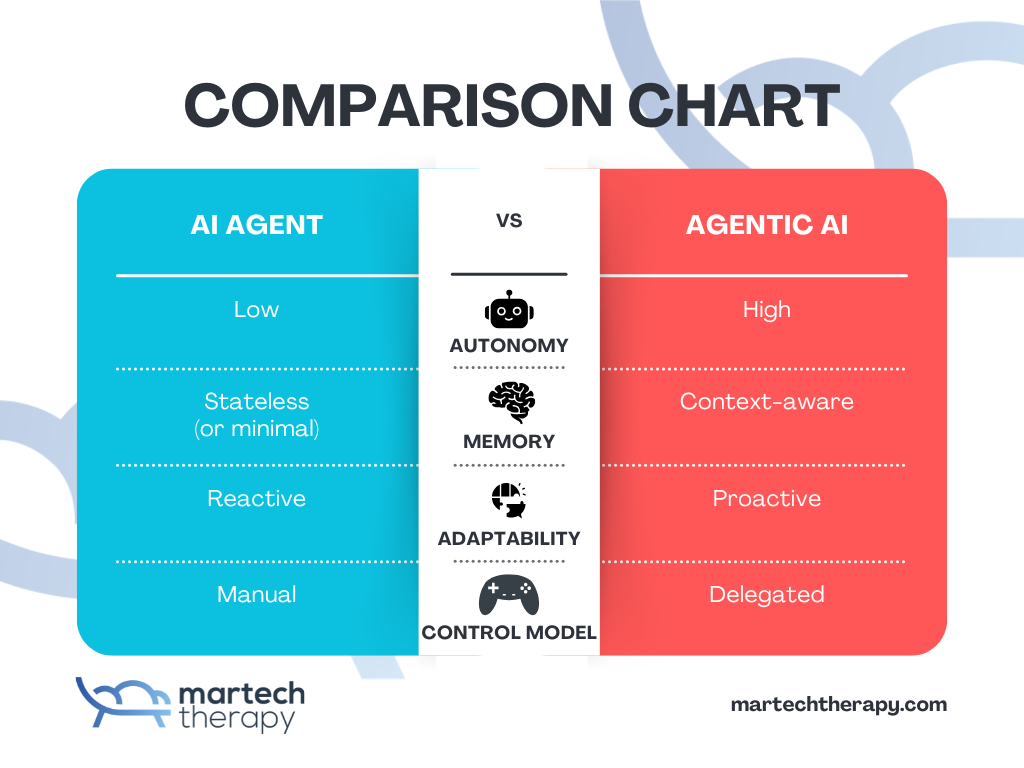

To understand why this change in perspective feels so significant, at least to me, it helps to ask what makes agentic AI different under the hood. Traditional AI agents are optimized to execute predefined actions based on a limited set of conditions. They don’t learn across sessions or revise their approach unless a human retrains the model.

Agentic AI, on the other hand, builds on architectural elements such as memory, multi-step planning, and sometimes reinforcement learning. These features allow it to pursue open-ended goals, evaluate outcomes over time, and then adjust its strategies based on performance.

In short, it reasons, not like a person, but like a system designed to operate persistently across continuously changing contexts. And reasoning is what makes it feel risky. We’re no longer dealing with a tool that does what it’s told. We’re working with something that may choose how to help.
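To make the contrast concrete, here is a minimal sketch of my own, not something Paul or Aampe described. It assumes a toy send-time problem with made-up open rates, and it stands in for "agentic" behavior with a simple epsilon-greedy loop: the system remembers what happened after each send and shifts toward what works. Every name in it (`scripted_agent`, `AgenticSender`, `simulate`) is hypothetical, and real agentic systems are far richer than this.

```python
import random
from dataclasses import dataclass, field

SEND_HOURS = [8, 12, 18]  # hypothetical candidate send times


def scripted_agent() -> int:
    """A classic 'agent': follows a fixed instruction, always 08:00."""
    return 8


@dataclass
class AgenticSender:
    """Toy stand-in for agentic behavior: keeps memory of outcomes
    and revises its own strategy (epsilon-greedy). An assumption,
    not a description of any real product."""
    epsilon: float = 0.1
    sends: dict = field(default_factory=lambda: {h: 0 for h in SEND_HOURS})
    opens: dict = field(default_factory=lambda: {h: 0 for h in SEND_HOURS})

    def open_rate(self, hour: int) -> float:
        return self.opens[hour] / self.sends[hour] if self.sends[hour] else 0.0

    def choose_hour(self, rng: random.Random) -> int:
        # Explore occasionally, or when there is no history yet.
        if rng.random() < self.epsilon or not any(self.sends.values()):
            return rng.choice(SEND_HOURS)
        # Otherwise exploit the hour with the best observed open rate.
        return max(SEND_HOURS, key=self.open_rate)

    def record(self, hour: int, opened: bool) -> None:
        # Memory: every outcome updates what the system knows.
        self.sends[hour] += 1
        self.opens[hour] += int(opened)


def simulate(steps: int = 5000, seed: int = 0) -> AgenticSender:
    rng = random.Random(seed)
    # Made-up "true" open rates, hidden from the sender.
    true_open_rate = {8: 0.10, 12: 0.15, 18: 0.40}
    sender = AgenticSender()
    for _ in range(steps):
        hour = sender.choose_hour(rng)
        sender.record(hour, rng.random() < true_open_rate[hour])
    return sender


if __name__ == "__main__":
    sender = simulate()
    print("scripted agent always sends at:", scripted_agent())
    print("agentic sender's send counts:", sender.sends)
```

The scripted agent never leaves 08:00, however the audience responds. The second system was only given a goal (opens) and a set of options, and it drifts toward whichever hour performs best without anyone telling it to. That drift is the part we don’t directly control.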

This is where the fear creeps in. Not because the AI will fail, but because it might succeed, in ways we didn’t predict or control.

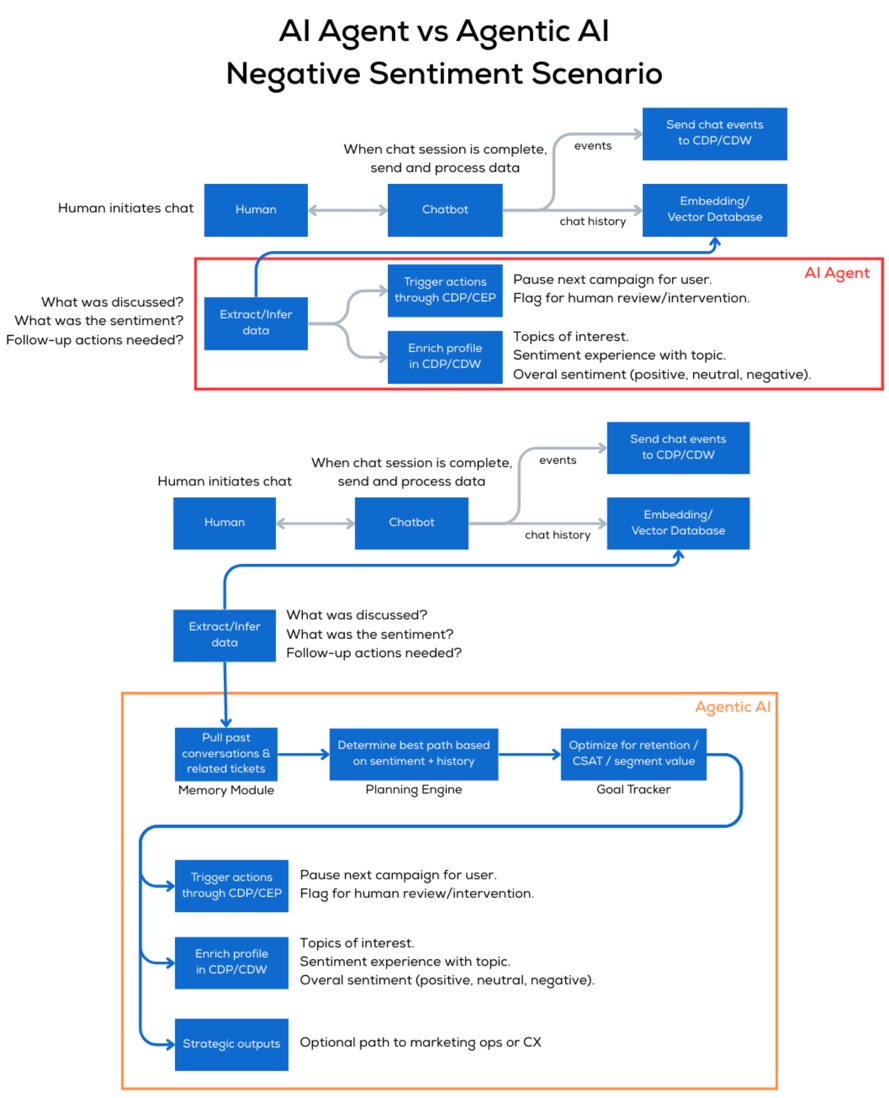

A good analogy, beyond the chatbot example shown above, came to me while driving my relatively modern electric vehicle. The GPS gives directions and updates them based on traffic, and the car’s software can keep you in your lane and at a safe distance from other cars, but you’re still in control. You can ignore the directions, disable driving assistance, take a detour, or stop for coffee. And if you miss the exit, that’s your problem; the car won’t correct your error. That’s an AI agent. But a self-driving car? That’s agentic AI. It doesn’t just advise; its purpose is to act. And trusting it to get you there safely means giving up the wheel, at least partially. That’s where most of us hesitate, especially when it comes to our business.

That anxiety is familiar to anyone who’s built a team. Early on, founders do everything themselves. Messaging, pricing, outreach, operations. Control is tight because there’s no other choice. But as the company grows, control becomes a liability. You have to delegate. You have to let people own decisions. You have to trust the team to deliver on outcomes you care about, even if they approach the problem differently.

Agentic AI asks us to revisit that same leadership question:

“What are we willing to let go of in order to scale what we care about?”

In Martech, this question is already surfacing. Some systems adapt campaigns based on intent signals. Others reshape customer journeys without anyone manually triggering those changes. These systems are showing the early traits of agency. They aren’t just reactive. They’re persistent, context-aware, and increasingly able to handle ambiguity.

This doesn’t mean handing over control entirely. It means establishing clear parameters and then allowing systems to operate within them. The role shifts from micromanager to designer. We build the framework and let the AI navigate within it.
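One way to picture the designer’s role is a small set of limits that a human writes once and that every AI-proposed action is checked against. The sketch below is only an illustration under my own assumptions: the field names, the limits, and the `review` function are all hypothetical and not taken from any specific Martech platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    """Human-defined limits. All names and values are illustrative."""
    allowed_channels: frozenset = frozenset({"email", "push"})
    max_messages_per_week: int = 3
    max_discount_pct: float = 15.0


@dataclass(frozen=True)
class ProposedAction:
    """What the AI wants to do for one customer (hypothetical shape)."""
    channel: str
    discount_pct: float
    messages_sent_this_week: int


def review(action: ProposedAction, rails: Guardrails) -> list[str]:
    """Return the violated limits; an empty list means the action may run."""
    violations = []
    if action.channel not in rails.allowed_channels:
        violations.append(f"channel '{action.channel}' is not allowed")
    if action.messages_sent_this_week >= rails.max_messages_per_week:
        violations.append("weekly message cap reached")
    if action.discount_pct > rails.max_discount_pct:
        violations.append("discount exceeds the approved maximum")
    return violations


if __name__ == "__main__":
    rails = Guardrails()
    # Inside the frame: the system decides freely.
    print(review(ProposedAction("email", 10.0, 1), rails))
    # Outside the frame: the action is blocked or escalated to a human.
    print(review(ProposedAction("sms", 20.0, 3), rails))
```

The point of the design is that the human never picks the channel or the discount for an individual customer. We only decide where the edges are, and what happens when the system reaches one.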

The most significant change we need to address is cultural. Our current Martech systems are built around explicit instructions. But if we want to work with more autonomous AI, we need to embrace a new mindset. One that values outcomes over rigid control.

“What might that look like?” I hear you asking. To help get you started, consider these three steps:

1. Define the scope of decisions we’re comfortable outsourcing
2. Create evaluation methods that assess judgment, not just output
3. Think less about control and more about cooperation

That change won’t come easily, and I expect resistance from leaders worried about their positions. But it’s necessary if we want AI to move from utility to partnership in the real world, and if we want to stop hiding behind hypotheses.

If you’ve found yourself unsure about what separates agents from truly agentic systems, you’re not alone. I was in the same place until I asked a better question. Not what this AI can do, but what I’m willing to let go of.