Agentic AI in Martech: The billion decision problem

Part 1: Why scale changes everything about AI in Martech

I've been having a strange, recurring thought lately. It started after my conversation with Paul from Aampe, when he mentioned their AI agents handle somewhere between 15 and 200 billion decisions every week.

I love astronomy and the awe-inducing scale of the universe. Nevertheless, that number still amazed me, probably because I couldn't quite wrap my head around it. So I did what any reasonable person would do. I grabbed a calculator and started doing some deeply unnecessary math.

Let's say you're a marketing ops manager making about 50 meaningful decisions per day: which segment gets the email blast, what time to send it, which creative variant to test, and whether to suppress that angry customer from the promotion. Fifty decisions feels reasonable, maybe even conservative.

At that pace, you'd make roughly 18,000 decisions per year. To match what Aampe's systems do in a single week, using the conservative 15 billion figure, you'd need about 833,000 years. If we go with their upper range of 200 billion weekly decisions, you're looking at 11 million years of human decision-making compressed into seven days.

For context, 11 million years ago, our ancestors were still figuring out how to walk upright.
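For anyone who wants to check the math, here is a minimal Python sketch of the same back-of-envelope calculation. It assumes 50 decisions a day on every day of the year (365 days); `human_years_to_match` is just an illustrative helper name, not anything from Aampe.

```python
# Back-of-envelope check: how many years would one person need to match
# a single week of agentic decisions? Assumes 50 decisions a day, 365 days a year.
DECISIONS_PER_DAY = 50
DAYS_PER_YEAR = 365


def human_years_to_match(weekly_ai_decisions, decisions_per_day=DECISIONS_PER_DAY):
    """Years one human needs to make as many decisions as the AI makes in a week."""
    decisions_per_year = decisions_per_day * DAYS_PER_YEAR  # 18,250 at 50/day
    return weekly_ai_decisions / decisions_per_year


for weekly in (15_000_000_000, 200_000_000_000):  # the quoted weekly range
    years = human_years_to_match(weekly)
    print(f"{weekly:,} decisions/week -> {years:,.0f} human-years")
```

With the unrounded 18,250 decisions a year, the conservative figure comes out at roughly 822,000 years; rounding down to 18,000 a year gives the 833,000 quoted above. The upper figure lands at about 11 million years either way.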

This feels like more than a productivity improvement. This feels like crossing some kind of threshold, a black hole’s event horizon, if we’re sticking to the astronomy analogy, where the old rules stop applying entirely.

Which got me thinking about what happens when we reach that threshold. Over the next few posts, I aim to explore how marketing teams are actually working with, or considering working with, agentic AI systems. Not just vendor demos or conference presentations, but the seriously messy reality of designing around systems that operate at scales we can't directly observe. I'm genuinely curious about this space (pun intended), and I suspect I'm going to learn as much from writing about it as anyone learns from reading it.

When volume breaks everything

I've also been thinking about this in the context of Paul's subway map metaphor. He described how traditional marketing automation works like the New York subway map: simplified, predictable, designed by experts who compressed complex reality into something humans could navigate. You can look at that map and trace exactly how you'll get from Brooklyn to the Bronx.

But what happens when your system is making billions of routing decisions every week, each one slightly different based on real-time context that changes faster than any human could track?

You end up with something more like Google Maps during rush hour in a thunderstorm. The system considers traffic patterns, weather conditions, construction updates, and probably a dozen other factors you might never think to include, such as someone’s preferred route or an extra stop for an unexpected errand. It's making constant micro-adjustments based on information streams that would overwhelm any human trying to follow along.

The difference is that with Google Maps, you can still see the route it's taking. With agentic AI in Martech, you're often flying blind at 30,000 feet while the system handles everything below the clouds.

The governance illusion

Here's what's been bothering me about most conversations around AI governance in marketing. Sorry, I just need to let off some steam. Bear with me. We really need to look beyond the fanfare and the overnight, self-proclaimed AI gurus (I won’t name names). We're still designing oversight frameworks as if humans can meaningfully monitor these systems. Board presentations still include slides about "human in the loop" controls and approval workflows.

But how exactly do you audit 15 billion decisions? Even if you could review one decision per second, working 24 hours a day with no breaks, you'd need about 475 years to get through a single week's worth of choices. And by then, the system would have made roughly 370 trillion more decisions while you weren't watching. I mean, come on… 🤯
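The audit arithmetic works the same way. A minimal sketch, assuming one decision reviewed per second around the clock, a 365-day year, and the conservative 15 billion weekly figure; `audit_years` and `decisions_made_meanwhile` are illustrative helper names.

```python
SECONDS_PER_WEEK = 60 * 60 * 24 * 7      # 604,800
SECONDS_PER_YEAR = 60 * 60 * 24 * 365    # 31,536,000 (ignores leap years)


def audit_years(decisions, seconds_per_decision=1.0):
    """Years of non-stop, 24/7 review needed to look at every decision once."""
    return decisions * seconds_per_decision / SECONDS_PER_YEAR


def decisions_made_meanwhile(years, weekly_decisions):
    """Decisions the system keeps making while the audit is running."""
    weeks = years * SECONDS_PER_YEAR / SECONDS_PER_WEEK  # 365/7 weeks per year
    return weeks * weekly_decisions


weekly = 15_000_000_000                  # conservative weekly figure
years = audit_years(weekly)
backlog = decisions_made_meanwhile(years, weekly)
print(f"Audit time: {years:,.1f} years")
print(f"New decisions meanwhile: {backlog / 1e12:,.0f} trillion")
```

Because a week of review at one decision per second covers only 604,800 decisions, the backlog grows with the square of the weekly volume: the faster the system, the further behind any line-by-line audit falls.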

This mathematical reality suggests we might need to rethink what governance means when systems operate at these scales. Traditional approval processes become impractical. Audit trails become overwhelming. The very concept of human oversight starts to bump against basic math.

Beyond human comprehension

What strikes me most about this scale problem is how it challenges basic assumptions about control and responsibility. If your agentic AI is optimizing customer journeys based on real-time sentiment analysis, behavioral pattern recognition, and contextual signals across dozens of touchpoints, the resulting decisions exist in a space that's beyond human intuition.

A marketing manager might understand why they chose to send an email at 14:00 (or 2 PM for my US readers) on Tuesday. But how do you understand a system that's simultaneously optimizing send times for millions of individual customers based on their personal engagement patterns, current emotional state, recent purchase behavior, and predicted life events?

You can't. And maybe that's the point.

According to Paul, agentic systems don't just execute plans; they develop their own strategies (hence my reference to the movie WarGames above; tic-tac-toe FTW 🎉). They learn what works and adjust their approach accordingly. In some ways, they're less like tools and more like extremely fast, extremely focused employees who never sleep and never forget anything.

Except these employees are making decisions at a volume that exceeds human comprehension, using context that changes faster than human analysis, optimizing for outcomes across timeframes that span microseconds to months.
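How Aampe's agents actually learn isn't described here, so the following is only a hypothetical illustration of the general idea of "learning what works and adjusting". It uses a textbook epsilon-greedy multi-armed bandit, a technique chosen just for this example, to pick a send hour for a single customer from observed engagement. `SendTimeAgent`, the candidate hours, and the engagement rates in the simulation are all made up.

```python
import random
from collections import defaultdict


class SendTimeAgent:
    """Hypothetical epsilon-greedy bandit that learns a send hour per customer.

    Illustrative only; not Aampe's (or any vendor's) actual algorithm.
    """

    def __init__(self, hours, epsilon=0.1, seed=None):
        self.hours = list(hours)          # candidate send hours, e.g. [9, 12, 14]
        self.epsilon = epsilon            # share of sends spent exploring
        self.rng = random.Random(seed)
        # (customer_id, hour) -> [sends, engagements]
        self.stats = defaultdict(lambda: [0, 0])

    def rate(self, customer_id, hour):
        sends, engaged = self.stats[(customer_id, hour)]
        # Optimistic default: untried hours look perfect, pushing early tries of each.
        return engaged / sends if sends else 1.0

    def best_hour(self, customer_id):
        # Hour with the highest observed engagement rate (first one wins ties).
        return max(self.hours, key=lambda h: self.rate(customer_id, h))

    def choose_hour(self, customer_id):
        # Explore: with probability epsilon, try a random hour.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.hours)
        # Exploit: otherwise send at the best hour found so far.
        return self.best_hour(customer_id)

    def record(self, customer_id, hour, engaged):
        # Update the counts after observing whether the customer engaged.
        stats = self.stats[(customer_id, hour)]
        stats[0] += 1
        stats[1] += int(engaged)


# Simulated customer who (unknown to the agent) engages most at 14:00.
true_rates = {9: 0.05, 12: 0.10, 14: 0.40, 18: 0.15, 21: 0.08}
agent = SendTimeAgent(hours=true_rates, epsilon=0.1, seed=42)
env = random.Random(7)

for _ in range(2000):
    hour = agent.choose_hour("customer-123")
    agent.record("customer-123", hour, env.random() < true_rates[hour])

print(f"Learned send hour: {agent.best_hour('customer-123')}:00")
```

The optimistic starting values make the agent try every hour early on before it settles; `epsilon` controls how often it keeps exploring afterwards, so it can still notice if the customer's habits change.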

The uncomfortable questions

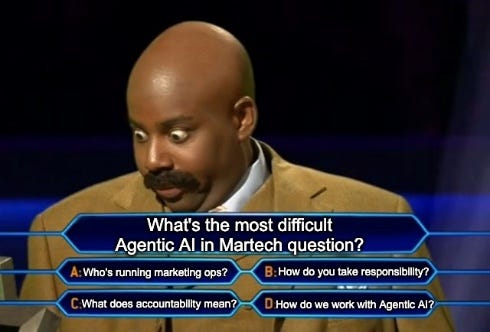

This scale reality forces some uncomfortable questions that I'm still working through. Are you ready? It’s time for “Who wants to be a Martech-millionaire?” 👇🏻

If your AI makes 200 billion decisions a week while you make 250, who's really running your marketing operation?

When systems adapt their strategies faster than humans can evaluate them, what does accountability even mean?

How do you take responsibility for outcomes you literally cannot track back to specific decisions?

I don't have answers to these questions yet. I am not exactly the Nostradamus of Martech. However, I suspect they're the right questions to ask as more teams experiment with agentic systems.

The Martech industry loves to talk about AI as an enhancement to human capabilities. But when you dig into the actual numbers, it starts looking less like enhancement and more like a fundamentally different approach to marketing operations.

I'm starting to think the interesting question might not be how to control these systems, but how to work with them. That requires rethinking some basic assumptions about oversight, delegation, and what collaboration looks like when your AI partner operates at inhuman scales.

I'm still figuring out what that looks like in practice. But I'm pretty sure it starts with accepting that some problems are too big for human-scale solutions.

And 200 billion weekly decisions definitely qualifies as too big.

What's your experience with high-volume AI decision-making? Are you seeing similar scale challenges in your Martech stack? I'm curious whether other teams are grappling with these governance questions or if I'm overthinking the math.


Agreed. Auditing millions of decisions and A/B tests is not scalable and would not make sense. What if governance placed more emphasis on guardians and on what goals and outcomes really mean, which is more strategic and human-led (for now), and simply let AI lead the orchestration and testing? There is still a lot of work to do on optimising what a goal is; goals are very often too high-level. IMO, that's one of the areas these agentic AI systems can help optimise further.